Prioritizing Fundamental Capabilities for the Intelligence Age

What science & technology should we steer towards?

Introduction: Faster Times

Since Convergent Research launched as a non-profit in 2021, AI has advanced at a speed that has surprised many experts.

While we don’t know what will happen, it’s our view that the next two decades – a relevant timeline for many mid-to-long range R&D projects – will likely overlap with an “AI transition” in which AI systems come to transform much of science, the economy, and society.

So we have been trying to ask ourselves: what does it mean to do high-impact science as we head into this transition period?

How we do science will certainly change if AIs become more powerful and widespread: some research avenues that once seemed impossibly difficult will likely become more tractable and much cheaper; others will almost certainly be made redundant by AI-driven automation; still others will need additional resources and development for us to leverage the opportunities presented by this wave of technologies.

How can we identify what capabilities will matter most in an increasingly intelligence-saturated world?

At Convergent, we think it is possible to shape the trajectory of science and technology by strategically identifying and launching projects that unlock progress at critical leverage points.

Since 2021, and alongside the current explosion in AI, we’ve been building a launch platform for new organizations – FROs, or Focused Research Organizations – engineered to break through bottlenecks in science and engineering.

In this post, we’d like to share our thinking about how we’ve been incorporating the strategic landscape of AI into our FRO roadmapping process.

(Other organizations across the ecosystem have been asking this question too; we participated in - and read with great interest the other contributions to - IFP’s Launch Sequence essay collection, released yesterday. We link to relevant proposals from that collection, as well as other resources, throughout this post.)

Directed Science

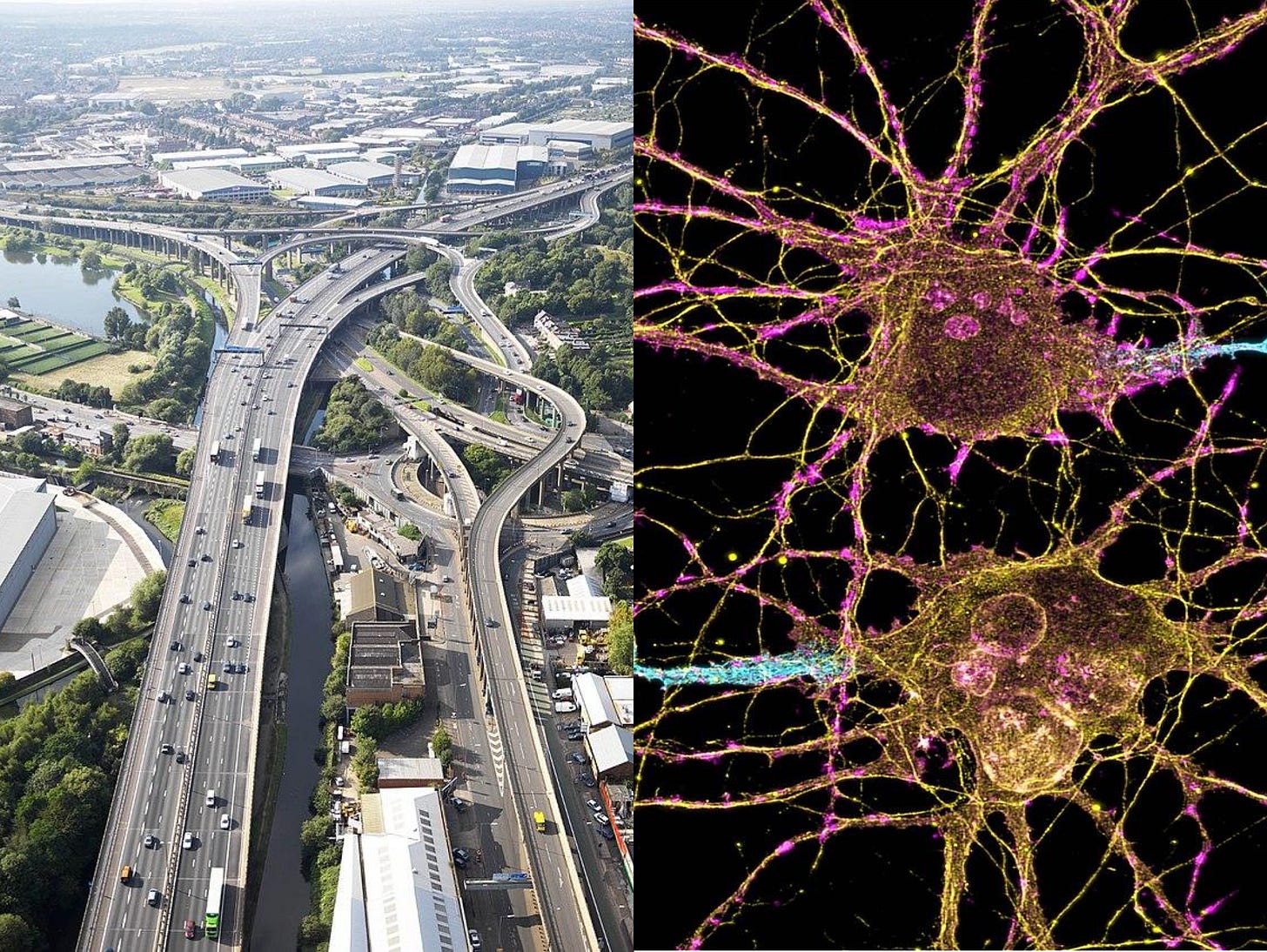

Some advances, like DNA sequencing or the transistor, act as keystone developments that unlock entire fields of progress downstream. (Some economists have called the most powerful of these “general purpose technologies” – the original GPTs – which drive “whole eras of technical progress and growth”.) By identifying these crucial leverage points and hastening their development, we believe we can influence the trajectory of science and technology. Careful roadmapping, in our view, can identify the core bottlenecks to progress in a field, as well as the fundamental capabilities that could bridge those gaps.

But Convergent isn’t just a think tank for scientific roadmapping.

Think of us as a mission control for frontier technology. We launch, support and oversee missions in the form of coordinated teams to build the critical infrastructure to bridge those gaps. To date, our scientific bottleneck analysis and roadmapping led us to launch FROs like E11 Bio, Forest Neurotech and Lean FRO, each of which is unlocking a major scientific bottleneck that we believe is pivotal during a period of rapidly advancing AI.

New types of scientific institutions offer an opportunity to pursue directed R&D that rapidly responds to a changing technological and societal landscape. This is reflected in the White House’s recent report on AI, “Winning the Race: America’s AI Action Plan”, which noted that “Focused Research Organizations… using AI… to make fundamental scientific advancements” should be a key implementation strategy for federal investments in AI-enabled science in America.

Broad AI Acceleration and Its Implications for Research

The rapid advance of AI changes how we should think about prioritization for such directed efforts. Many traditional research projects are built on implicit assumptions about the speed and cost of human effort, about how the economy responds to scientific advances, and about the broader context that determines the relative benefits and risks of new technologies. When AI becomes a dominant force, these assumptions need to be revisited.

As we consider the path ahead, four principles guide our approach to scientific and technological development during this period:

Avoid fast obsolescence — Technological advances may render existing research directions, tools, or methods obsolete much sooner than expected. For example, many traditional analyses of critical global challenges assume slow technological progress, but in an AI-accelerated world, these timelines could collapse. Under conventional assumptions, solving climate change might focus on incremental improvements in renewable energy deployment, but if AI drastically accelerates materials discovery, new primitives like room-temperature superconductors could emerge far sooner than expected, reshaping the option space. Similarly, in software infrastructure, efforts to manually refactor legacy climate modeling codebases - once considered decade-long, expensive and difficult-to-organize projects - could become straightforward due to AI-powered software engineering. Don’t rely on fragile assumptions about the future.

Complement AI — As AI capabilities grow, some research areas will become even more critical. The key here is to identify work that AI cannot easily replace but instead depends on humans for, which may quickly become the bottleneck. Even superhuman reasoning cannot substitute for measuring contingent facts about the universe or about biology - so projects like mapping biological dark matter (i.e., new kinds of previously-unseen biological data layers, like classes of molecules we haven’t been able to systematically map before), developing new observational tools to do so, and generating high-quality empirical datasets can become even more valuable. Here, the highest-impact work could lie in creating the foundational inputs AI needs to function, and giving AI the tools to do creative science, as Ben Reinhardt describes in “Teaching AI How Science Actually Works: How block-grant labs can generate the real-world data AI needs to do science”; Cultivarium’s PRISM tool is a prototype example of this type of work. These kinds of large-scale scientific efforts remain crucial.

Use the steering wheel, not just the pedals — We believe that we have a relatively narrow window to steer technological progress in beneficial directions - not just in AI itself, but in the surrounding fields. We believe right action during this critical window will shape many of the enduring impacts of this technology. This means that advancing “defensive” technologies faster today could spell the difference between stability and chaos in an accelerated world. We’ve previously made reference to “differential technological development” and “defensive (or decentralization, or differential) acceleration”; this principle says that we must consider whether the technologies we are accelerating are defense-dominant or not, and that we ought to steer new technology development away from equilibria in which technologies that harm human wellbeing can proliferate unchecked. (See, for example, Miles Brundage’s essay on this topic, ‘Operation Patchlight: How to leverage advanced AI to give defenders an asymmetric advantage in cybersecurity’.)

For example, robust biosecurity capabilities - like the ability to do universal pathogen detection - become far more urgent as AI accelerates the ability to design synthetic viruses. (And note that the major AI labs have recently activated their safety contingencies for bioweapons production ‘uplift’.) Similarly, provably secure cyber-physical systems will be essential if innovations in AI supercharge the deployment of offensive cyber-weapons – yet AI can also be used to accelerate the development of provable security measures.

We also need to unlock faster progress in lagging scientific domains like neuroscience, which could make it possible to study the neural basis of human value formation at an unprecedented level. This is work that could prove pivotal for AI alignment in the medium term and for the role of humans through brain-computer interfaces and other neurotechnologies, as we argue in this proposal released by the Institute for Progress yesterday.

At the same time, the explosion of AI-generated content and the difficulty that democratic societies have in keeping pace will make our ‘epistemic resilience’ more critical than ever. Building tools that support robust human reasoning, self-governance, scientific synthesis, fact checking, and democratic participation will shape our ability to navigate this transition with our values intact. We believe that building such tooling (across areas like defensive capabilities, epistemic resilience, and democratic coordination) rapidly is a critical domain for future FROs and other coordinated research programs. Now is the time to invest in the foundations of a world in which AI can amplify beneficial resilience measures.

Imagine good futures — In times of rapid change, it would do us well to expand our creative toolkit and imagination without being overly anchored on what was previously considered possible. We should pursue high-variance projects that could be transformative in a rapidly evolving world (even if their immediate benefits in today’s world may be meaningful but more modest). Just as Xerox PARC not only invented modern computing but also pioneered new forms of human-computer interaction, we should invest today in research that expands the range of human agency in ways we can’t yet fully predict. Can AI help mathematicians solve the Riemann Hypothesis? What are the design principles of new interfaces by which we can digitize and transmit elements of human mental imagery and experience? Can we create new forms of scientific exploration, like a real-world SimCity for the global economy? Can we design new systems for revitalizing ecosystems that have been previously thought unrecoverable? Many doors may be unexpectedly unlocked, so rather than just banging against the scarce few that have historically seemed like they might budge, giving long-range thought to which doors we might most want to open and where we might go on the other side has renewed value.

Steering under Acceleration

So far, Convergent Research has launched almost a dozen FROs across biology, neuroscience, astronomy, software infrastructure for formal mathematics, and climate. As we enter the next phase of our work, our goal continues to be the generation of strong counterfactual impact by directing scientific and technological progress in beneficial ways.

Research Priorities for the Next Phase of Convergent

Based on our principles, we believe that the following areas are poised for outsized counterfactual impact. Over the coming months, we hope to engage scientists, funders, and policymakers in exploring, refining, and pursuing them.

Neuroscience and Brain-Computer Interfaces

The importance of understanding the brain is overdetermined. As we explored with E11’s Andrew Payne in “Mapping the Brain for Alignment: How to map the mammalian brain’s connectome to solve fundamental problems in neuroscience, psychology, and AI robustness”, better understanding the brain can impact our ability to design safer AIs, tackle massive amounts of suffering experienced by people around the world, create low energy chips, and much else.

Neuroscience for AI Safety – Understanding how learning in the brain is steered by innate, evolved brain systems could inform AI alignment, which faces an analogous steering problem. In addition to the Launch Sequence essay above, we recently participated in a roadmapping exercise in this area and plan to deepen our work.

BCIs – A closer integration between brains and computers may be needed to ensure that human intentions remain central in an AI-dominated world. We’ve already launched Forest Neurotech, a transformative BCI FRO, and we see additional FRO opportunities in this space.

Fundamental Brain Mapping and Modeling – Progress in functional connectomics could help explain how rationality, irrationality, and ethics emerge from the brain, and could let us explore new possibilities for AI based on more direct brain simulation. This could include:

Connectomics in the live brain (our FRO E11 Bio is scaling our ability to map static connectomes)

Simulating entire small nervous systems digitally – and the new kinds of datasets that might enable this

Neural Basis of Consciousness – Understanding consciousness may become critical if AI systems approach sentience. While this work is early-stage, progress in neuroscience is needed to have a chance at unlocking it.

Revealing Biological and Ecological Dark Matter

As we discussed above, AI’s impact in science depends on the availability of high-quality data, yet many crucial biological and environmental domains remain largely unmapped. Creating better measurement devices and analytical tools to help bring these areas of functional importance into view is the kind of effort that may be well-suited to FROs.

An example from biology: Glycomics – Glycans (sugar molecules that decorate cells, viruses, and antibodies) are fundamental to biology, yet while we have genomics and proteomics, we lack an equivalent field of glycomics to systematically study them.

An example from climate: Non-CO₂ Greenhouse Effects – Key climate processes, such as nitric oxide emissions and aerosol-cloud interactions, remain poorly characterized, limiting our ability to model and respond to climate change.

And even more new observational tools – unlocking biological dark matter could involve research directions like:

Magnetic sensing and control of biological systems – a new type of control knob on biology that is just beginning to be unearthed

Quantum biology – what is the role of electron spin in biology? (See this non-Convergent project.)

Foundation datasets for new modalities like metabolomics, lipidomics and glycomics that haven’t previously seen the kind of investment genomics has (we’ve been starting here with PTI, our FRO on proteome-wide and proteoform-resolved single-cell proteomics)

Collecting data from unusual organisms/species (building on our work with Cultivarium)

4D intact tissue cell observatories – see Nobel Prize winner Eric Betzig’s take on a FRO-shaped project

AI for Epistemics

The world is changing faster than ever, and humans augmented by AI systems (or AI systems acting fairly autonomously) will be capable of generating overwhelming amounts of information, some of which will be dangerously misleading or difficult to interpret. Even today, many channels of information exchange are clogged with AI slop generated by actors motivated by platform and market incentives. Better epistemic infrastructure is needed to improve human reasoning, coordination, and truth-seeking. Projects in this area that we’re excited about include:

AI for Scientific Fact-Checking – Automated error or fraud detection in scientific literature, improving reproducibility and statistical design of studies.

“System 2” Recommenders – Imagine a feed powered by your long-term goals, not just your immediate engagement. Perhaps AI-powered recommendation systems could be produced as a public good: systems that help audit your existing feed and suggest content that you will view as valuable upon reflection, while reducing your propensity to get stuck in a filter bubble.

Knowledge Synthesis and Verification:

How can AI-generated knowledge become more productive and cumulative?

What should a Wikipedia that is shared by humans and machines look like?

Human-in-the-loop Fact-Checking – Automating community-driven misinformation checks, surfacing divergent perspectives to reduce polarization.

AI for Strategic Decision-Making – AI tools for geopolitical strategy, economic modeling, and coordination in high-stakes scenarios.

AI Tools for Scaling Deliberative Democracy – Tools to enable group decision-making and collective intelligence.

Provably Secure and Safe Cyber-Physical Systems

Many risks from AI misuse or loss of control manifest through cyber-physical systems in which AIs can digitally effect changes in the real world, whether in cybersecurity, biotechnology, or supply chains. Formal verification and hardware-based constraints could provide the provably defense-dominant security infrastructure necessary to prevent AI-enabled exploits. (This builds on our prior work on formal verification for math with the Lean FRO. See also: ‘The Great Refactor’ by Herbie Bradley & Girish Sastry.) Examples of projects to evaluate could include:

Hardware Locks for DNA Synthesis – Preventing unauthorized synthesis of dangerous pathogens.

Secure Compute Governance – FlexHEG and other cryptographically enabled mechanisms for controlling how and where AI runs at the hardware level. (See also: “Faster AI Diffusion Through Hardware-Based Verification: How to use privacy-preserving verification in the AI hardware stack to build trust and limit misuse” by Nora Ammann and David ‘davidad’ Dalrymple.)

Formal Verification of Contracts and AI Behavior – Borrowing from formal verification in math (e.g., Lean) to develop provably compliant governance mechanisms.

Privacy-Preserving AI Enclaves – Enabling local, secure AI processing without unnecessary data exposure.

Structured transparency systems – Creating a larger negotiating space for coordination, assurance, auditing and other selective information sharing among actors dealing with advanced technologies. (We’re looking forward to Andrew Trask and Lacey Strahm’s forthcoming essay related to this, “Unlocking a Million Times More Data for AI”.)

Automating Secure Software Development – AI-driven workflows for producing formally verified, bug-free software at scale, to improve security and make software defense-dominant.

Defining the Technological Basis of Post-Scarcity

New research directions could prototype technology for a world of material post-scarcity by providing programmable control over matter at the atomic and molecular scale. This could change both what is materially possible for us to manufacture and grow in the short-term, and help calibrate our planning for how AI-enabled science development could open up a future with much broader shared material prosperity.

Modular Molecular Legos – While AI-driven protein design has advanced medicine and biomanufacturing, it has largely been within the rubric of biological templates. What if we created programmable, Lego-like molecular structures (and mechanisms for assembling them) for nonorganic advanced material production and compositional fabrication?

AI-Driven Materials Science – Self-driving labs and AI-guided search for room-temperature superconductors and other transformative materials.

Programming Developmental Biology – The AI-driven modeling of cells and tissues could extend the tools of synthetic biology from single cells to complex organism development. (Could we engineer “brainless cows” to produce meat without factory farming? Could mushrooms be grown with the taste and texture of filet mignon?)

Enabling Autarky – More prosaically, how self-contained and self-generating can our manufacturing processes and supply chains become, for applications ranging from space colonization to civilizational resilience? Can AI help us preserve and rapidly propagate tacit knowledge in practical manufacturing, materials, research techniques and other critical areas where human knowledge is scarce and vulnerable? Can bioengineering help us turn waste into medicines that can be reliably produced domestically? Can we make food without agriculture?

Complex Systems Economics

AI may drive large-scale and rapid changes to labor markets and global production, but economic modeling remains hamstrung in gaming out the multifarious impacts of these shifts. Moving toward agent-based simulations and more data-driven, fine-grained economic modeling could allow better policy design.

Complexity Economics – Developing AI-powered, agent-based, out-of-equilibrium models of the economy, moving beyond traditional econometrics or simplified analytically tractable economic models. This could build on the work of Doyne Farmer.

Simulating AI-Led Economic Transitions – A “real-world SimCity” to model AI-driven economic and labor shifts.

New Economic Data Collection Methods – High-resolution tracking of economic behavior to train better economic models and improve decision-making.

Defensive Biosecurity Technologies

AI-driven advances in biotechnology raise the risk of engineered pathogens and of broader proliferation of offensive biotechnology, requiring rapid development of more universal, proactive biosecurity measures. Topics we have explored to date at Convergent include:

Pathogen-Agnostic Detection – Low-cost, metagenomic sequencing or other novel physical modalities for detecting arbitrary biological threats before they spread. (See, for example, Simon Grimm’s “Scaling Pathogen Detection with Metagenomics: How to generate the data necessary to reliably detect new pathogen outbreaks with AI”.)

Far-UVC for Air Sterilization – Deploying Far-UVC light to passively sterilize air and reduce pandemic risk. See e.g. this report by Blueprint Biosecurity.

The Road Ahead

Now is an important time to ask ourselves what futures we want to enable, and what this means for research directions today. While there are huge uncertainties about exactly how AI will play out, actively trying to envision and prioritize beneficial R&D directions – including ones that might previously have seemed outlandish – and bring about the coordination needed to approach them systematically is a muscle worth building, even as the resulting plans will need frequent revising.

What key directions and possibilities are we missing?

Acknowledgements: Thanks to Eric Drexler, Evan Miyazono, Steve Byrnes, Matt Botvinick, David Dalrymple, Ed Boyden, Adam Brown, Mary Wang, Ales Flidr, Viv Belenky, Kevin Esvelt, Doyne Farmer and Liana Paris for inspiration and discussions.